User transfer

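I have been reading the Ethereum code lately and got curious about how a transaction is processed and how it finally ends up written to the DB. I tried to analyze part of it, and these are some brief notes.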

Commands

The whole procedure in the geth console:

- Unlock the account

```js
personal.unlockAccount(eth.accounts[0], passwd) // passwd: the account's password
```

- Send the transaction

```js
eth.sendTransaction({from: eth.accounts[0], to: eth.accounts[1], value: web3.toWei(1, "ether")})
```

- Start mining

```js
web3.miner.start()
```

- Stop mining

```js
web3.miner.stop()
```

Once a block containing the transaction has been mined, you can see that 1 ETH has moved from account 0 to account 1.

Flow

First, the overall picture:

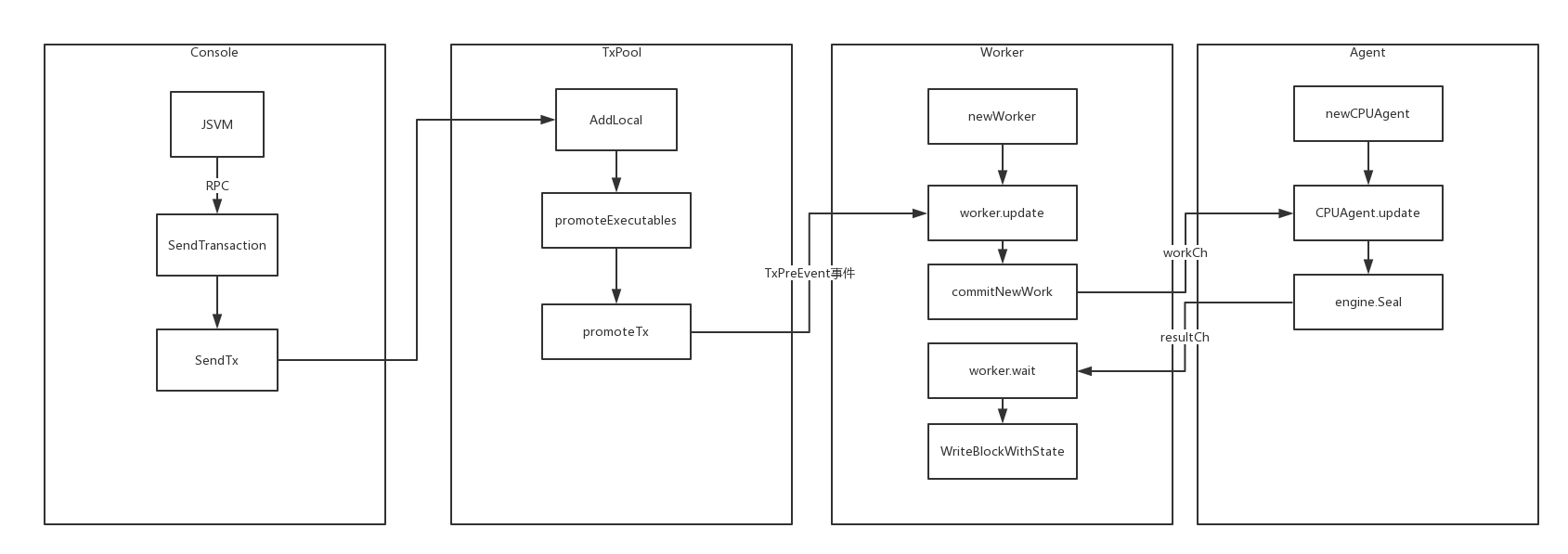

A quick walk through the flow chart:

Ethereum's architecture is very clean here. In short, a transfer issued from the geth console calls into four parts of the code: internal, txpool, worker, and agent.

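- internal: the Ethereum internal methods that the console's JS VM actually calls over RPC; this is where sending a transaction is first triggered

- txpool: holds transactions that have not yet been packed into a block, i.e. those waiting to be packed

- worker: the block producer; it packages the transactions waiting in the txpool into work, sends that work to the mining agent to produce a block, then commits the block and broadcasts it

- agent: the miner; following the consensus engine and the work assigned by the worker, it fills in the actual block contents and returns the result to the worker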
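The four roles above hand data to each other in a fixed order. The sketch below models that order with Go channels: internal feeds txpool, the worker turns pending transactions into work, and the agent returns a sealed block. All types and the `runPipeline` function are hypothetical simplifications, not geth's real structures. A fixed placeholder nonce stands in for real sealing.

```go
package main

import "fmt"

// Tx, Work and Block are hypothetical, stripped-down types.
type Tx struct {
	From, To string
	Value    uint64
}

type Work struct {
	Number uint64
	Txs    []Tx
}

type Block struct {
	Number uint64
	Txs    []Tx
	Nonce  uint64
}

// runPipeline pushes txs through internal -> txpool -> worker -> agent -> worker.
func runPipeline(txs []Tx) *Block {
	txCh := make(chan Tx, len(txs))
	workCh := make(chan *Work)
	resultCh := make(chan *Block)

	// agent: seals each piece of work it receives and hands the block back.
	go func() {
		defer close(resultCh)
		for w := range workCh {
			// Placeholder nonce instead of real proof-of-work.
			resultCh <- &Block{Number: w.Number, Txs: w.Txs, Nonce: 42}
		}
	}()

	// internal: the RPC layer submits every transaction to the pool.
	for _, tx := range txs {
		txCh <- tx
	}
	close(txCh)

	// txpool: collects the pending transactions.
	var pending []Tx
	for tx := range txCh {
		pending = append(pending, tx)
	}

	// worker: packs pending transactions into work, sends it to the agent,
	// and waits for the sealed block.
	workCh <- &Work{Number: 1, Txs: pending}
	close(workCh)
	return <-resultCh
}

func main() {
	b := runPipeline([]Tx{{From: "account0", To: "account1", Value: 1}})
	fmt.Printf("block %d sealed with %d transaction(s)\n", b.Number, len(b.Txs))
}
```

Channels make the one-way handoffs explicit. The real code is concurrent and event-driven rather than a single call.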

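So most of the block-production flow lives in the worker, and the way its work is laid out as a linear sequence is quite interesting. Next comes the detailed code analysis. It is the dry part, so feel free to skip it if you are not interested.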

Code analysis

Sending the transaction


```go
// Submit the transaction to the transaction pool and log the result
```
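The comment above describes one step: add the transaction to the pool, log it, and return its hash to the caller. Here is a minimal sketch of that step. `TxPool`, `Add` and `submitTransaction` are hypothetical stand-ins, not geth's API. Ethereum hashes a transaction with Keccak-256 over its RLP encoding. This sketch uses SHA-256 over a formatted string instead, to stay within the standard library.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"errors"
	"fmt"
	"log"
	"sync"
)

type Tx struct {
	Nonce    uint64
	From, To string
	Value    uint64
}

// Hash is a stand-in for the real Keccak-256(RLP(tx)) hash.
func (tx Tx) Hash() string {
	sum := sha256.Sum256([]byte(fmt.Sprintf("%d|%s|%s|%d", tx.Nonce, tx.From, tx.To, tx.Value)))
	return "0x" + hex.EncodeToString(sum[:])
}

// TxPool is a hypothetical pool of pending transactions keyed by hash.
type TxPool struct {
	mu      sync.Mutex
	pending map[string]Tx
}

func NewTxPool() *TxPool { return &TxPool{pending: make(map[string]Tx)} }

// Add rejects a transaction the pool already holds, otherwise stores it.
func (p *TxPool) Add(tx Tx) error {
	p.mu.Lock()
	defer p.mu.Unlock()
	h := tx.Hash()
	if _, ok := p.pending[h]; ok {
		return errors.New("already known")
	}
	p.pending[h] = tx
	return nil
}

// submitTransaction adds tx to the pool, logs it and returns its hash.
func submitTransaction(pool *TxPool, tx Tx) (string, error) {
	if err := pool.Add(tx); err != nil {
		return "", err
	}
	h := tx.Hash()
	log.Printf("submitted transaction hash=%s from=%s to=%s", h, tx.From, tx.To)
	return h, nil
}

func main() {
	pool := NewTxPool()
	tx := Tx{Nonce: 0, From: "account0", To: "account1", Value: 1}
	h, err := submitTransaction(pool, tx)
	fmt.Println(h != "", err) // true <nil>
	_, err = submitTransaction(pool, tx)
	fmt.Println(err) // already known
}
```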


Packing transactions

When the miner is initialized, it creates the worker by calling newWorker:

```go
// Tx pool updates; submission to the network
```

Open questions at this point:

- What exactly are the ChainHeadEvent, ChainSideEvent and TxPreEvent events?

- Analysis of commitNewWork()

- Analysis of commitTransactions()

```go
func (self *worker) update() {
	// ...
}
```
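My reading of `update()` in geth of this era is that it is a `select` loop over the three event channels from the open questions. ChainHeadEvent seems to fire when a new block becomes the canonical head, and the worker then commits new work. ChainSideEvent fires when a block is imported onto a side chain, and the worker keeps it as a possible uncle. TxPreEvent fires when a transaction becomes pending in the pool, and the worker applies it to the pending state only when it is not mining. Treat the sketch as a hypothesis to check against the source. All types and fields are hypothetical, and `newWorkCount++` stands in for `commitNewWork()`.

```go
package main

import "fmt"

type ChainHeadEvent struct{ Number uint64 }
type ChainSideEvent struct{ Hash string }
type TxPreEvent struct{ Hash string }

type worker struct {
	chainHeadCh chan ChainHeadEvent
	chainSideCh chan ChainSideEvent
	txCh        chan TxPreEvent
	quit        chan struct{}

	mining         bool
	newWorkCount   int             // stands in for calls to commitNewWork()
	possibleUncles map[string]bool // side-chain blocks that may become uncles
	pendingTxs     []string        // txs applied to the pending state
}

func newWorker(mining bool) *worker {
	return &worker{
		chainHeadCh:    make(chan ChainHeadEvent),
		chainSideCh:    make(chan ChainSideEvent),
		txCh:           make(chan TxPreEvent),
		quit:           make(chan struct{}),
		mining:         mining,
		possibleUncles: make(map[string]bool),
	}
}

// update handles one event at a time until quit is closed.
func (w *worker) update() {
	for {
		select {
		case <-w.chainHeadCh:
			w.newWorkCount++ // new head: start work on top of it
		case ev := <-w.chainSideCh:
			w.possibleUncles[ev.Hash] = true
		case ev := <-w.txCh:
			if !w.mining {
				w.pendingTxs = append(w.pendingTxs, ev.Hash)
			}
		case <-w.quit:
			return
		}
	}
}

func main() {
	w := newWorker(false)
	done := make(chan struct{})
	go func() { w.update(); close(done) }()
	w.txCh <- TxPreEvent{Hash: "0xaaa"}
	w.chainSideCh <- ChainSideEvent{Hash: "0xbbb"}
	w.chainHeadCh <- ChainHeadEvent{Number: 1}
	close(w.quit)
	<-done
	fmt.Println(w.newWorkCount, len(w.possibleUncles), len(w.pendingTxs)) // 1 1 1
}
```

A single goroutine owning the `select` means the handlers never run concurrently with each other.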

Mining

```go
func (self *CpuAgent) mine(work *Work, stop <-chan struct{}) {
	// ...
}
```
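`mine` hands the work's block to the consensus engine's Seal method. It then reports either the sealed result or nil if sealing was aborted or failed, so the worker always gets an answer. Here is a minimal sketch of that contract. The `Engine` interface is trimmed down: in geth versions of this period, `Seal` also takes a chain reader. `instantEngine` and `failingEngine` are hypothetical test engines.

```go
package main

import (
	"errors"
	"fmt"
)

type Block struct {
	Number uint64
	Nonce  uint64
}

type Work struct{ Block *Block }

type Result struct {
	Work  *Work
	Block *Block
}

// Engine is a hypothetical, trimmed-down consensus engine interface.
type Engine interface {
	Seal(block *Block, stop <-chan struct{}) (*Block, error)
}

// instantEngine "seals" immediately unless stop is already closed.
type instantEngine struct{}

func (instantEngine) Seal(b *Block, stop <-chan struct{}) (*Block, error) {
	select {
	case <-stop:
		return nil, nil // aborted
	default:
	}
	sealed := *b
	sealed.Nonce = 7
	return &sealed, nil
}

// failingEngine always reports an error.
type failingEngine struct{}

func (failingEngine) Seal(*Block, <-chan struct{}) (*Block, error) {
	return nil, errors.New("sealing failed")
}

type CpuAgent struct {
	engine   Engine
	returnCh chan *Result
}

// mine seals the work's block and always sends exactly one value back.
func (a *CpuAgent) mine(work *Work, stop <-chan struct{}) {
	if result, err := a.engine.Seal(work.Block, stop); result != nil {
		a.returnCh <- &Result{Work: work, Block: result}
	} else {
		if err != nil {
			fmt.Println("block sealing failed:", err)
		}
		a.returnCh <- nil
	}
}

func main() {
	agent := &CpuAgent{engine: instantEngine{}, returnCh: make(chan *Result, 1)}
	agent.mine(&Work{Block: &Block{Number: 1}}, make(chan struct{}))
	r := <-agent.returnCh
	fmt.Println(r.Block.Number, r.Block.Nonce) // 1 7
}
```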

```go
// Implements the consensus engine's Seal method: tries to find a nonce that satisfies the block's difficulty requirement
```

```go
// The actual PoW mining algorithm: starting from a seed, searches for a valid nonce
```

Submitting to the network


```go
// Commit new work
```
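After sealing, the worker takes each result from the agent, skips nil results, and records the block. As far as I can tell, in geth of this era this happens in `worker.wait()`, which writes the block and state to the database and posts a NewMinedBlockEvent that is broadcast to peers; work on the next block follows. Here is a minimal sketch of that loop. The in-memory `chain` slice replaces the database and the `broadcast` callback replaces peer networking. The sketch calls `commitNewWork` directly for brevity, whereas in geth the next work may instead be triggered through the chain head event.

```go
package main

import "fmt"

type Block struct{ Number uint64 }
type Result struct{ Block *Block }

// worker is a hypothetical stand-in for the result-handling side.
type worker struct {
	recv      chan *Result
	chain     []*Block     // stands in for the database
	broadcast func(*Block) // stands in for announcing to peers
	nextWork  uint64       // number of the block the next work will build
}

// commitNewWork starts work on top of the current head.
func (w *worker) commitNewWork() {
	head := w.chain[len(w.chain)-1]
	w.nextWork = head.Number + 1
}

// wait processes sealed results until recv is closed.
func (w *worker) wait() {
	for result := range w.recv {
		if result == nil {
			continue // sealing was aborted or failed
		}
		w.chain = append(w.chain, result.Block)
		w.broadcast(result.Block)
		w.commitNewWork()
	}
}

func main() {
	w := &worker{
		recv:      make(chan *Result, 2),
		chain:     []*Block{{Number: 0}},
		broadcast: func(b *Block) { fmt.Println("broadcast block", b.Number) },
	}
	w.recv <- nil
	w.recv <- &Result{Block: &Block{Number: 1}}
	close(w.recv)
	w.wait()
	fmt.Println(len(w.chain), w.nextWork) // 2 2
}
```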